C: Parallel Programming¶

Today we have two problems for you to tackle. They both parallelize the pi.c code you developed for day 1. Both programs will need to be compiled at one of the TACC supercomputers.

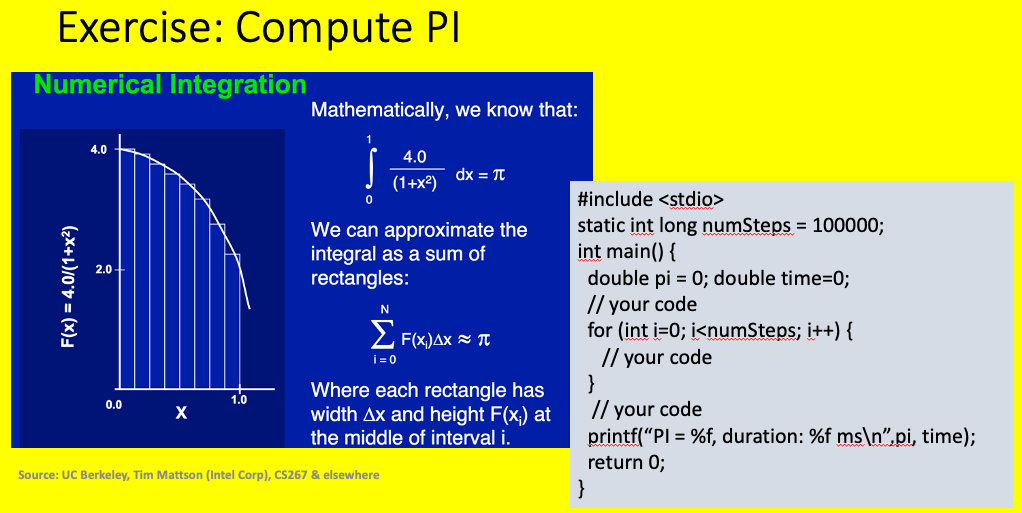

The figure below shows an method to compute pi by numerical integration. We would like you to implement that computation in a C program.

Computation of pi numerically¶

The solution pi.c can be found on github. The contents of that file is presented here:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 | #include <stdio.h>

#include <time.h>

#include <math.h>

static long int numSteps = 1000000000;

int main() {

// perform calculation

double pi = 0;

double dx = 1./numSteps;

double x = dx*0.50;

for (int i=0; i<numSteps; i++) {

pi += 4./(1.+x*x);

x += dx;

}

pi *= dx;

printf("PI = %16.14f Difference from math.h definition %16.14f \n",pi, pi-M_PI);

return 0;

}

|

Note

When compiling at TACC if you wish to use gcc as I have done, issue the following command when you login.

module load gcc

When building and testing that the application works, use idev, as I have been showing in the videos.

When launchig the job to test the performance you will need to use sbatch and place your job in the queue. To do this you need to create a script that will be launched when the job runs. I have placed two scripts in each of the file folders. The script informs the system how many nodes and cores per node, what the expected run time is, and how to run the jib. Once the executable exists, the job is launched using the following command issued from a login node:

sbatch submit.sh

Full documentation on submitting scripts for OpenMP and MPI can be found online at TACC

Warning

Our solution of pi.c as written as a loop dependency which may need to revise for the second problem.

Problem 1: Parallelize using MPI¶

You are to modify the pi.c application and run it to use mpi. I have included a few files in code/parallel/ExercisesDay4/ex1 to help you. They include pi.c above, gather1.c and a submit.sh script. gather1.c was presented in the video, and us shown below:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 | #include <mpi.h>

#include <stdio.h>

#include <stdlib.h>

#define LUMP 5

int main(int argc, char **argv) {

int numP, procID;

// the usual mpi initialization

MPI_Init(&argc, &argv);

MPI_Comm_size(MPI_COMM_WORLD, &numP);

MPI_Comm_rank(MPI_COMM_WORLD, &procID);

int *globalData=NULL;

int localData[LUMP];

// process 0 is only 1 that needs global data

if (procID == 0) {

globalData = malloc(LUMP * numP * sizeof(int) );

for (int i=0; i<LUMP*numP; i++)

globalData[i] = 0;

}

for (int i=0; i<LUMP; i++)

localData[i] = procID*10+i;

MPI_Gather(localData, LUMP, MPI_INT, globalData, LUMP, MPI_INT, 0, MPI_COMM_WORLD);

if (procID == 0) {

for (int i=0; i<numP*LUMP; i++)

printf("%d ", globalData[i]);

printf("\n");

}

if (procID == 0)

free(globalData);

MPI_Finalize();

}

|

The submit script is as shown below.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 | #!/bin/bash

#--------------------------------------------------------------------

# Generic SLURM script – MPI Hello World

#

# This script requests 1 node and 8 cores/node (out of total 64 avail)

# for a total of 1*8 = 8 MPI tasks.

#---------------------------------------------------------------------

#SBATCH -J myjob

#SBATCH -o myjob.%j.out

#SBATCH -e myjob.%j.err

#SBATCH -p development

#SBATCH -N 1

#SBATCH -n 4

#SBATCH -t 00:02:00

#SBATCH -A DesignSafe-SimCenter

ibrun ./pi

|

One possible solution, which includes multiple approaches, is as shown in the following:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 | #include <mpi.h>

#include <stdio.h>

#include <stdlib.h>

#include <time.h>

static int long numSteps = 1000000000;

int main(int argc, char **argv) {

int numP, pid;

//

// the usual mpi initialization

//

MPI_Init(&argc, &argv);

MPI_Comm_size(MPI_COMM_WORLD, &numP);

MPI_Comm_rank(MPI_COMM_WORLD, &pid);

//

// start timer

//

clock_t start_t = clock();

//

// init some variable

//

double pi = 0;

double dx = 1.0/(double) numSteps;

//

// compute processors contribution to pi

//

for (int i=pid; i<numSteps; i+=numP) {

double x = (i+0.5)*dx;

pi += 4./(1.+x*x);

}

pi *= dx;

//

// gather contributions on P0 & sum

//

double *globalSum = 0;

if (pid == 0) {

globalSum = (double *)malloc(numP * sizeof(double) );

}

MPI_Gather(&pi, 1, MPI_DOUBLE, globalSum, 1, MPI_DOUBLE, 0, MPI_COMM_WORLD);

if (pid == 0) {

for (int i=1; i<numP; i++) // 0 as pi already as p0 contribution

pi += globalSum[i];

}

if (pid == 0)

free(globalSum);

//

// end timer

//

clock_t end_t = clock();

double time = (double)(end_t - start_t) / CLOCKS_PER_SEC;

if (pid == 0)

printf("PI = %16.8f, duration: %f s\n",pi, time);

//

// usual termination for MPI

//

MPI_Finalize();

return 0;

}

|

Problem 2: Parallelize using OpenMP¶

You are to modify the pi.c application and run it to use mpi. I have included a few files in code/parallel/ExercisesDay4/ex1 to help you. They include pi.c above and submitPI.sh script. submitPI.sh is as shown:

One possible solution, which includes multiple approaches, is as shown in the following:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 |

//

// file to compute pi numerically using a number of different approaches

// - demonstrates various OpenMP approaches

#include <omp.h>

#include <stdio.h>

#include <time.h>

static int long numSteps = 1000000000;

int main() {

// perform calculation

double pi = 0;

double dx = 1./numSteps;

double x = 0.5*x;

//

// compute Serially

//

double start = omp_get_wtime();

{

pi = 0;

double sum = 0;

double x = dx*0.50;

for (int i=0; i<numSteps; i++) {

pi += 4./(1.+x*x);

x += dx;

}

pi*=dx;

}

printf("Serial: PI = %16.8f in %.4g sec\n",pi, omp_get_wtime()-start);

//

// Compute in Parallel with false sharing issue

//

start = omp_get_wtime();

int nThreads;

double pSum[32];

for (int i=0; i<32; i++)

pSum[i] = 0;

#pragma omp parallel

{

int tid = omp_get_thread_num();

int numT = omp_get_num_threads();

if (tid == 0)

nThreads = numT;

for (int i=tid; i<numSteps; i+=numT) {

double x = (i+0.5)*dx;

pSum[tid] += 4./(1.+x*x); // line with false sharing issue

}

}

pi = 0;

for (int i=0; i<nThreads; i++) {

pi += pSum[i];

}

pi *= dx;

printf("\nParallel Results: %d Threads\n",nThreads);

printf("Basic False Sharing: PI = %16.8f in %.4g sec\n",pi, omp_get_wtime()-start);

//

// Basic with padded array to remove false sharing

//

start = omp_get_wtime();

double padSum[32][64];

for (int i=0; i<nThreads; i++)

padSum[i][0] = 0;

#pragma omp parallel

{

int tid = omp_get_thread_num();

int numT = omp_get_num_threads();

if (tid == 0)

nThreads = numT;

for (int i=tid; i<numSteps; i+=numT) {

double x = (i+0.5)*dx;

padSum[tid][0] += 4./(1.+x*x); // padSum .. now no longer assesing

// array values next to each other

}

}

pi = 0;

for (int i=0; i<nThreads; i++) {

pi += padSum[i][0];

}

pi *= dx;

printf("Fix Previous with array padding: PI = %16.8f in %.4g sec\n",pi, omp_get_wtime()-start);

//

// Demonstration #omp parallel for reduction

//

start = omp_get_wtime();

#pragma omp parallel for reduction(+:pi) private(x)

for (int i=0; i<numSteps; i++) {

double x = (i+0.5)*dx;

pi += 4./(1.+x*x);

}

pi *= dx;

printf("Reduction: PI = %16.8f in %.4g sec\n", pi,omp_get_wtime()-start);

//

// Replace Reduction with Synchronization section: critical

//

start = omp_get_wtime();

#pragma omp parallel

{

double sum = 0;

double x = 0;

#pragma omp for

for (int i=0; i<numSteps; i++) {

x = (i+0.5)*dx;

sum += 4./(1.+x*x);

}

#pragma omp critical

{

pi += sum;

// OTHER STUFF IF YOU WANT .. NOT TOO MUCH

}

}

pi *= dx;

printf("Synchronization: PI = %16.8f in %.4g sec\n",pi, omp_get_wtime()-start);

return 0;

}

|