Methods in UCSD UQ Engine

Transitional Markov chain Monte Carlo

Transitional Markov chain Monte Carlo (TMCMC) is a numerical method used to draw samples from the target posterior PDF. The algorithm is flexible, applicable in general settings, and parallelizable. It can therefore use high-performance computing (HPC) resources to sample the posterior PDF effectively, even when the likelihood function involves a computationally expensive finite element (FE) model evaluation.

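In Bayesian inference, the posterior probability distribution of the unknown quantities, represented by the vector \(\mathbf{\theta}\), is obtained by applying Bayes’ rule as follows:

\[p(\mathbf{\theta \ | \ y}) = \frac{p(\mathbf{y \ | \ \theta}) \, p(\mathbf{\theta})}{p(\mathbf{y})}\]

where \(p(\mathbf{y \ | \ \theta})\) is the likelihood function, \(p(\mathbf{\theta})\) is the prior PDF, and \(p(\mathbf{y})\) is the normalizing constant.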

The idea behind TMCMC is to avoid sampling directly from the target posterior PDF \(p(\mathbf{\theta \ | \ y})\) and instead to sample from a series of simpler intermediate probability distributions that converge to the target posterior PDF. To achieve this, the TMCMC sampler proceeds through a series of stages, starting from the prior PDF and ending at the posterior PDF. These intermediate probability distributions (called tempered posterior PDFs) are controlled by the tempering parameter \(\beta_j\) as

\[p(\mathbf{\theta \ | \ y})_j \propto p(\mathbf{y \ | \ \theta})^{\beta_j} \, p(\mathbf{\theta}), \quad j = 0, 1, \ldots, m, \quad 0 = \beta_0 < \beta_1 < \ldots < \beta_m = 1\]

Index \(j\) denotes the stage number, \(m\) denotes the total number of stages, and \(p (\mathbf{\theta \ | \ y})_j\) is the tempered posterior PDF at stage \(j\) controlled by the parameter \(\beta_j\). At the initial stage \((j = 0)\), \(\beta_0 = 0\) and the tempered distribution \(p(\mathbf{\theta \ | \ y})_{j=0}\) is just the prior joint PDF \(p(\theta)\). The TMCMC sampler progresses by monotonically increasing the value of \(\beta_j\) at each stage \(j\) until it reaches the value of 1. At the final stage \((j = m)\), \(\beta_m = 1\) and the tempered distribution \(p(\mathbf{\theta \ | \ y})_{j = m}\) is the target posterior joint PDF \(p(\mathbf{\theta \ | \ y})\).
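To make the role of \(\beta_j\) concrete, the following minimal sketch evaluates an unnormalized tempered log-posterior, assuming the usual TMCMC form in which the likelihood is raised to the power \(\beta_j\). The prior, the likelihood, and the function names are hypothetical choices made for illustration and are not part of the UCSD_UQ engine.

```python
import math

def log_tempered_posterior(theta, beta, log_likelihood, log_prior):
    """Unnormalized log of the tempered posterior: beta * log-likelihood + log-prior."""
    return beta * log_likelihood(theta) + log_prior(theta)

# Hypothetical 1-D example: standard normal prior, one observation y = 2 with unit noise.
def log_prior(theta):
    return -0.5 * theta ** 2 - 0.5 * math.log(2.0 * math.pi)

def log_likelihood(theta, y=2.0):
    return -0.5 * (y - theta) ** 2 - 0.5 * math.log(2.0 * math.pi)

# beta = 0 recovers the prior, beta = 1 the (unnormalized) posterior.
for beta in (0.0, 0.5, 1.0):
    print(beta, log_tempered_posterior(1.0, beta, log_likelihood, log_prior))
```

With `beta = 0.0` the returned value equals the log-prior alone, and with `beta = 1.0` it equals the sum of the log-likelihood and the log-prior, which mirrors the two end stages described above.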

TMCMC represents the tempered posterior PDF at every stage by a set of weighted samples (known as particles). TMCMC approximates the \(j^{th}\) stage tempered posterior PDF \(p(\mathbf{\theta \ | \ y})_j\) by weighting, resampling, and perturbing the particles of the \((j-1)^{th}\) stage intermediate joint PDF \(p(\mathbf{\theta \ | \ y})_{j-1}\). For details about the TMCMC algorithm, the interested reader is referred to Ching and Chen [Ching2007] and Minson et al. [Minson2013].
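The weighting and resampling operations can be sketched as follows. This is a simplified illustration rather than the engine's implementation: it assumes the next value of \(\beta\) is already given, uses plain multinomial resampling, and omits the perturbation (MCMC) step. The function names and the 1-D example are hypothetical.

```python
import math
import random

def tmcmc_reweight(log_likes, beta_prev, beta_next):
    """Normalized importance weights proportional to L_k ** (beta_next - beta_prev)."""
    d_beta = beta_next - beta_prev
    scaled = [d_beta * ll for ll in log_likes]
    shift = max(scaled)  # subtract the maximum for numerical stability
    unnorm = [math.exp(s - shift) for s in scaled]
    total = sum(unnorm)
    return [w / total for w in unnorm]

def resample(particles, weights, rng):
    """Multinomial resampling: draw len(particles) particles with replacement."""
    return rng.choices(particles, weights=weights, k=len(particles))

rng = random.Random(0)
particles = [rng.gauss(0.0, 1.0) for _ in range(2000)]    # stage 0: samples from a N(0, 1) prior
log_likes = [-0.5 * (2.0 - th) ** 2 for th in particles]  # one observation y = 2, unit noise
weights = tmcmc_reweight(log_likes, beta_prev=0.0, beta_next=0.5)
new_particles = resample(particles, weights, rng)
```

After resampling, the particles concentrate toward the region favored by the data, so their mean moves from the prior mean toward the observation.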

[Ching2007] J. Ching and Y.-C. Chen, “Transitional Markov Chain Monte Carlo Method for Bayesian Model Updating, Model Class Selection, and Model Averaging”, Journal of Engineering Mechanics, 133(7), 816-832, 2007.

[Minson2013] S. E. Minson, M. Simons, and J. L. Beck, “Bayesian Inversion for Finite Fault Earthquake Source Models I-Theory and Algorithm”, Geophysical Journal International, 194(3), 1701-1726, 2013.

Bayesian Inference of Hierarchical Models

Consider, for model calibration, a dataset consisting of experimental results on test specimens of the same kind. Let \(\mathbf{y}_i\) denote the measured output response of the \(i^{th}\) specimen in the dataset \(\mathbf{Y} = \{\mathbf{y}_1, \mathbf{y}_2, ..., \mathbf{y}_{N_s}\}\), where \(N_s\) designates the number of specimens. Let \(\theta_i\) be the model parameter vector corresponding to the \(i^{th}\) specimen, where \(n_\theta\) denotes the number of model parameters. For the \(i^{th}\) specimen, the output response \(\mathbf{y}_i\) can be viewed as a function of \(\theta_i\) through the model \(\mathbf{h}\) and the following measurement equation:

\[\mathbf{y}_i = \mathbf{h}(\theta_i) + \mathbf{w}_i\]

where \(\mathbf{w}_i\) denotes the discrepancy between the response predicted by the model parameterized with \(\theta_i\), i.e., \(\mathbf{h}(\theta_i)\), and the experimental output response \(\mathbf{y}_i\); \(\mathbf{w}_i\) is termed the prediction error. With this measurement equation, the sources of real-world uncertainty, such as the measurement noise during data collection and the model form error, are lumped together and accounted for in the prediction error (also called noise) term \(\mathbf{w}_i\) (i.e., \(\mathbf{w}_i\) is also a proxy for model form error). In quoFEM, it is assumed that the prediction error is a zero-mean Gaussian white noise, thus,

\[\mathbf{w}_i \sim N(\mathbf{0}, \sigma_i^2 \times \mathbf{I}_{n_{\mathbf{y}_i} \times n_{\mathbf{y}_i}})\]

where \(\sigma_i^2\) denotes the variance of the prediction error \(\mathbf{w}_i\).

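With the measurement equation and the Gaussian white noise assumption above, \(\mathbf{y}_i\) is Gaussian and centered at the model response \(\mathbf{h}(\theta_i)\), with a diagonal covariance matrix equal to \(\sigma_i^2 \times \mathbf{I}_{n_{\mathbf{y}_i} \times n_{\mathbf{y}_i}}\), i.e.,

\[p(\mathbf{y}_i | \theta_i, \sigma_i^2) = N(\mathbf{h}(\theta_i), \sigma_i^2 \times \mathbf{I}_{n_{\mathbf{y}_i} \times n_{\mathbf{y}_i}})\]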

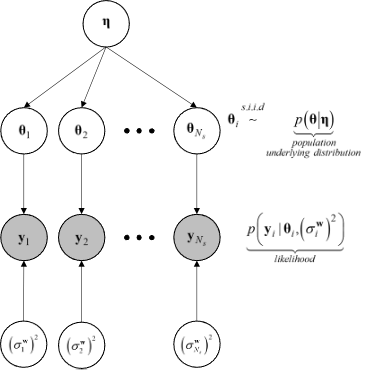
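As an illustration of this likelihood, the sketch below evaluates the Gaussian log-likelihood of one specimen for a given prediction error variance. The model `h` is a hypothetical stand-in (a straight line evaluated at three points) for the FE model, and the data values are made up for the example.

```python
import math

def gaussian_log_likelihood(y, prediction, sigma2):
    """log N(y; prediction, sigma2 * I) for equal-length sequences y and prediction."""
    n = len(y)
    sse = sum((yi - hi) ** 2 for yi, hi in zip(y, prediction))
    return -0.5 * n * math.log(2.0 * math.pi * sigma2) - 0.5 * sse / sigma2

def h(theta):
    # Hypothetical stand-in for the FE model: a straight line evaluated at x = 0, 1, 2.
    return [theta[0] + theta[1] * x for x in (0.0, 1.0, 2.0)]

y_i = [1.1, 2.9, 5.2]  # made-up measurements for one specimen
good = gaussian_log_likelihood(y_i, h([1.0, 2.0]), sigma2=0.04)
poor = gaussian_log_likelihood(y_i, h([0.0, 1.0]), sigma2=0.04)
print(good, poor)  # the better-fitting parameters give the larger log-likelihood
```

Working with the logarithm avoids numerical underflow when the response vector \(\mathbf{y}_i\) is long.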

In the UCSD_UQ engine, hierarchical Bayesian modeling is used to account for specimen-to-specimen variability in the parameter estimates. In hierarchical Bayesian modeling, the parameter vectors \(\theta_1, \theta_2, \ldots, \theta_{N_s}\) are treated as mutually statistically independent and identically distributed (s.i.i.d.) random variables following a PDF \(p(\theta | \eta)\). This is the PDF of the parent distribution, which represents the aleatory specimen-to-specimen variability.

The objective of hierarchical Bayesian inference is to jointly estimate the model parameters \(\theta_i\) for each specimen \(i\) (along with its prediction error variance \(\sigma_i^2\)) as well as the hyperparameters \(\eta\) of the parent distribution of the set \(\theta_i (i = 1, \ldots, N_s)\).

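Making use of the experimental results for a single specimen, \(\mathbf{y}_i\), Bayes’ theorem for all parameters to be inferred from this specimen can be written as

\[p(\theta_i, \sigma_i^2, \eta | \mathbf{y}_i) \propto p(\mathbf{y}_i | \theta_i, \sigma_i^2, \eta) \, p(\theta_i, \sigma_i^2, \eta)\]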

The specimen response \(\mathbf{y}_i\) depends solely on the model parameters \(\theta_i\) and the prediction error variance for that specimen, \(\sigma_i^2\), and is independent of the hyperparameters \(\eta\). Consequently, the conditional PDF \(p(\mathbf{y}_i | \theta_i, \sigma_i^2, \eta)\) reduces to \(p(\mathbf{y}_i | \theta_i, \sigma_i^2)\).

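Assuming \(\sigma_i^2\) to be statistically independent of \(\theta_i\) and \(\eta\) in the joint prior PDF of \(\theta_i\), \(\sigma_i^2\), and \(\eta\), Bayes’ theorem for a single specimen becomes

\[p(\theta_i, \sigma_i^2, \eta | \mathbf{y}_i) \propto p(\mathbf{y}_i | \theta_i, \sigma_i^2) \, p(\sigma_i^2) \, p(\theta_i | \eta) \, p(\eta)\]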

In the context of hierarchical Bayesian modeling, the entire experimental dataset from multiple specimens of the same kind, \(\mathbf{Y} = \{\mathbf{y}_1, \mathbf{y}_2, ..., \mathbf{y}_{N_s}\}\), is considered. The model parameters and the prediction error variances for all specimens are assumed to be mutually statistically independent, while the model parameters of the individual specimens are assumed to be samples from a parent distribution. The figure below shows the structure of the hierarchical model.

Structure of the hierarchical model

Under these assumptions, the equation for Bayesian updating of all unknown quantities in the hierarchical model, including the model parameters \(\Theta = \{\theta_1, \theta_2, \ldots, \theta_{N_s}\}\), the measurement noise variances \(\mathbf{s} = \{\sigma_1^2, \sigma_2^2, \ldots, \sigma_{N_s}^2\}\), and the hyperparameters \(\eta\), is given by

\[p(\Theta, \mathbf{s}, \eta | \mathbf{Y}) \propto p(\eta) \prod_{i=1}^{N_s} p(\mathbf{y}_i | \theta_i, \sigma_i^2) \, p(\sigma_i^2) \, p(\theta_i | \eta)\]

The marginal posterior distribution of the hyperparameters, \(p(\eta | \mathbf{Y})\), is obtained by marginalizing out \(\Theta\) and \(\mathbf{s}\) from \(p(\Theta, \mathbf{s}, \eta | \mathbf{Y})\) as

\[p(\eta | \mathbf{Y}) = \int \int p(\Theta, \mathbf{s}, \eta | \mathbf{Y}) \, d\Theta \, d\mathbf{s}\]

The distribution \(p(\eta | \mathbf{Y})\) describes the epistemic uncertainty in the value of the hyperparameters \(\eta\) due to the finite number of specimens \(N_s\) in the experimental dataset.

In the hierarchical approach, the probability distribution of the model parameters conditioned on the entire measurement dataset, \(p(\theta | \mathbf{Y})\), is given by

\[p(\theta | \mathbf{Y}) = \int p(\theta | \eta) \, p(\eta | \mathbf{Y}) \, d\eta\]

The conditional distribution \(p(\theta | \eta)\) models the aleatory specimen-to-specimen variability. Therefore, the probability distribution \(p(\theta | \mathbf{Y})\), referred to as the posterior predictive distribution of the model parameters \(\theta\), encompasses both the aleatory specimen-to-specimen uncertainty and the epistemic estimation uncertainty (due to the finite number of specimens \(N_s\) in the experimental dataset and the finite length of the experimental data corresponding to each specimen). It can be utilized for uncertainty quantification and propagation in reliability and risk analyses.
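In practice, samples from \(p(\theta | \mathbf{Y})\) can be obtained by drawing one \(\theta\) from \(p(\theta | \eta)\) for each posterior sample of \(\eta\). The sketch below assumes, purely for illustration, a scalar parameter with a normal parent distribution, so that each sample of \(\eta\) is a (hypermean, hypervariance) pair. The posterior samples of \(\eta\) are synthetic placeholders, not results from the engine.

```python
import random

def sample_posterior_predictive(hyper_samples, rng):
    """Draw one theta ~ N(mu, var) for every posterior sample eta = (mu, var)."""
    return [rng.gauss(mu, var ** 0.5) for mu, var in hyper_samples]

rng = random.Random(1)
# Synthetic placeholder for posterior samples of (hypermean, hypervariance).
hyper_samples = [(rng.gauss(10.0, 0.2), 1.0 + 0.1 * rng.random()) for _ in range(5000)]
theta_pred = sample_posterior_predictive(hyper_samples, rng)

mean_pred = sum(theta_pred) / len(theta_pred)
var_pred = sum((t - mean_pred) ** 2 for t in theta_pred) / (len(theta_pred) - 1)
print(mean_pred, var_pred)
```

The spread of `theta_pred` combines the spread of the parent distribution (aleatory) with the scatter of the hyperparameter samples (epistemic), which is the sense in which the posterior predictive distribution encompasses both sources of uncertainty.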

Special Case: Normal Population Distribution

The hierarchical modeling approach discussed above captures the specimen-to-specimen aleatory variability by modeling the model parameters corresponding to each experiment as a realization from a population distribution. In the UCSD_UQ engine, a multivariate normal distribution is adopted as the population distribution (i.e., \(\eta = (\mu_\theta, \Sigma_\theta)\)):

\[\theta_i \ | \ \mu_\theta, \Sigma_\theta \sim N (\mu_\theta, \Sigma_\theta), \quad i = 1, \ldots, N_s\]

In the Bayesian inference process, the hyperparameters characterizing the population probability distribution, namely the hypermean vector \(\mu_\theta\) and the hypercovariance matrix \(\Sigma_\theta\), are jointly estimated with all the \(\theta_i\) and \(\sigma_i^2\).

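So, for this special case, the joint posterior PDF of the hierarchical model becomes

\[p(\Theta, \mathbf{s}, \mu_\theta, \Sigma_\theta | \mathbf{Y}) \propto p(\mu_\theta, \Sigma_\theta) \prod_{i=1}^{N_s} p(\mathbf{y}_i | \theta_i, \sigma_i^2) \, p(\sigma_i^2) \, N(\theta_i | \mu_\theta, \Sigma_\theta)\]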

with \(\Theta\), \(\mathbf{Y}\), and \(\mathbf{s}\) as defined above.

In the UCSD_UQ engine, the sampling algorithm operates in the standard normal space i.e.,

and the Nataf transform is utilized to map the values provided by the sampling algorithm to the physical space of the model parameters before the model is evaluated.
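For statistically independent marginals, the Nataf transform reduces to mapping each standard normal value through the standard normal CDF and then through the inverse CDF of the target marginal. The sketch below shows this simplified case only, for a uniform and a lognormal marginal. The function names and distribution parameters are hypothetical, and correlated parameters would need the full Nataf transform, which is not shown.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution, mean 0 and standard deviation 1

def to_uniform(u, lower, upper):
    """Map a standard normal value u to a Uniform(lower, upper) variable."""
    return lower + (upper - lower) * STD_NORMAL.cdf(u)

def to_lognormal(u, log_mean, log_std):
    """Map a standard normal value u to a lognormal variable with the given log-space moments."""
    return math.exp(log_mean + log_std * u)

# A point proposed by the sampler in the standard normal space ...
u = [0.0, 1.0]
# ... mapped to the physical space before the model would be evaluated.
x = [to_uniform(u[0], 2.0, 4.0), to_lognormal(u[1], 0.0, 0.5)]
print(x)
```

Sampling in the standard normal space lets the same proposal mechanism be used regardless of the bounds or shape of the physical-space distributions.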

Sampling the Posterior Probability Distribution of the Parameters of the Hierarchical Model

The posterior joint PDF of the hierarchical model is high dimensional: its dimension is \(n_\theta \times N_s + N_s + n_\theta + n_\theta \times \frac{(n_\theta+1)}{2}\), corresponding to the \(\theta_i\) of each dataset, the \(\sigma_i^2\) of each dataset, \(\mu_\theta\), and \(\Sigma_\theta\), respectively. The Metropolis-within-Gibbs algorithm is therefore used to generate samples from the posterior probability distribution. To do this, conditional posterior distributions are derived for blocks of parameters from the joint posterior distribution of all the parameters of the hierarchical model. The Gibbs sampler generates values from these conditional posterior distributions iteratively. The conditional posterior distributions are lower dimensional than the joint distribution. The assumptions made next result in a few of the conditional posterior distributions being of a form that can be easily sampled from, thereby making it feasible to draw samples from the high-dimensional joint posterior PDF of the hierarchical model.
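As a quick worked example of the dimension count, the helper below (a hypothetical name, not an engine function) adds up the four contributions listed above.

```python
def hierarchical_posterior_dimension(n_theta, n_specimens):
    """Number of unknowns in the joint posterior of the hierarchical model."""
    theta_dims = n_theta * n_specimens        # theta_i for every dataset
    sigma_dims = n_specimens                  # sigma_i^2 for every dataset
    mean_dims = n_theta                       # hypermean vector
    cov_dims = n_theta * (n_theta + 1) // 2   # unique entries of the symmetric hypercovariance
    return theta_dims + sigma_dims + mean_dims + cov_dims

print(hierarchical_posterior_dimension(n_theta=5, n_specimens=10))  # 50 + 10 + 5 + 15 = 80
```

Even this modest case has 80 unknowns, which is why sampling block by block from lower-dimensional conditional distributions is attractive.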

The prior distributions for each \(\sigma_i^2\) are selected as the inverse gamma (IG) distribution:

The prior probability distribution for \((\mu_\theta, \Sigma_\theta)\) is chosen to be the normal-inverse-Wishart (NIW) distribution:

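With these assumptions, the conditional posterior distributions are the following:

\[\Sigma_\theta \ | \ \Theta \sim IW(\Sigma_n, m_n)\]

\[\mu_\theta \ | \ \Sigma_\theta, \Theta \sim N(\mu_n, \frac{\Sigma_\theta}{\nu_n})\]

\[\sigma_i^2 \ | \ \mathbf{y}_i, \theta_i \sim IG(\alpha_n, \beta_n)\]

\[p(\theta_i | \mathbf{y}_i, \sigma_i^2, \mu_\theta, \Sigma_\theta) \propto p(\mathbf{y}_i | \theta_i, \sigma_i^2) \, p(\theta_i | \mu_\theta, \Sigma_\theta)\]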

where:

and,

The Metropolis-within-Gibbs sampler generates values from the posterior joint PDF of the hierarchical model using the conditional posterior distributions through the following steps:

1. Select appropriate initial values for each of the \(\theta_i\).

2. Based on the \(k^{th}\) sample value, the \((k+1)^{th}\) sample value is obtained using the following steps:

   a. Generate a sample value of \(\Sigma_{\theta}^{(k+1)}\) from the conditional posterior distribution \(IW(\Sigma_n, m_n)\).

   b. Generate a sample value of \(\mu_\theta^{(k+1)}\) from the conditional posterior distribution \(N(\mu_n, \frac{\Sigma_\theta^{(k+1)}}{\nu_n})\).

   c. For each dataset, generate independently a sample value of \(\sigma_i^{2(k+1)}\) from the conditional posterior distribution of each \(\sigma_i^2\): \(IG(\alpha_n, \beta_n)\).

   d. For each dataset, generate a sample value of the model parameters \(\theta_i\) from the conditional posterior \(p(\mathbf{y}_i | \theta_i, \sigma_i^2) p(\theta_i | \mu_\theta, \Sigma_\theta)\) with a Metropolis-Hastings step as follows:

      i. Generate a candidate sample \(\theta_{i(c)}\) from a local random walk proposal density \(\theta_{i(c)} \sim N(\theta_i^{(k)}, \Sigma_i^{(k)})\), where \(\Sigma_i^{(k)}\) is the proposal covariance matrix of the random walk.

      ii. Calculate the acceptance ratio

      \[a_c = \frac{p(\mathbf{y}_i | \theta_{i(c)}, \sigma_i^{2(k+1)}) p(\theta_{i(c)} | \mu_\theta^{(k+1)}, \Sigma_{\theta}^{(k+1)})}{p(\mathbf{y}_i | \theta_i^{(k)}, \sigma_i^{2(k+1)}) p(\theta_i^{(k)}| \mu_\theta^{(k+1)}, \Sigma_{\theta}^{(k+1)})}\]

      iii. Generate a random value \(u\) from a uniform distribution on [0, 1] and set

      \[\theta_i^{(k+1)} = \begin{cases} \theta_{i(c)} & \text{if } u < a_c \\ \theta_i^{(k)} & \text{otherwise} \end{cases}\]
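The steps above can be sketched for the simplest possible case: a scalar parameter per specimen and a hypothetical model \(h(\theta) = \theta\). Several things here are assumptions made for illustration and are not taken from the UCSD_UQ engine: the standard textbook conjugate updates for the normal-inverse-Wishart and inverse gamma priors (in one dimension, \(IW(\Sigma_n, m_n)\) coincides with \(IG(m_n/2, \Sigma_n/2)\)), the prior values, the fixed proposal standard deviation `step`, the synthetic data, and all function names.

```python
import math
import random

def inv_gamma(rng, shape, rate):
    """Draw from IG(shape, rate) as the reciprocal of a Gamma(shape, scale=1/rate) draw."""
    return 1.0 / rng.gammavariate(shape, 1.0 / rate)

def log_lik(y, theta, sigma2):
    # Gaussian log-likelihood with the hypothetical model h(theta) = theta.
    sse = sum((v - theta) ** 2 for v in y)
    return -0.5 * len(y) * math.log(2.0 * math.pi * sigma2) - 0.5 * sse / sigma2

def log_parent(theta, mu, var):
    # Log-density of the normal parent distribution N(mu, var).
    return -0.5 * math.log(2.0 * math.pi * var) - 0.5 * (theta - mu) ** 2 / var

def gibbs_sweep(Y, thetas, rng, prior, step):
    """One Metropolis-within-Gibbs sweep (assumed standard conjugate updates, scalar theta)."""
    mu0, nu0, s0, m0, a0, b0 = prior
    n = len(thetas)
    t_bar = sum(thetas) / n
    scatter = sum((t - t_bar) ** 2 for t in thetas)
    nu_n, m_n = nu0 + n, m0 + n
    mu_n = (nu0 * mu0 + n * t_bar) / nu_n
    s_n = s0 + scatter + nu0 * n / nu_n * (t_bar - mu0) ** 2
    var = inv_gamma(rng, 0.5 * m_n, 0.5 * s_n)      # step a: hypervariance
    mu = rng.gauss(mu_n, math.sqrt(var / nu_n))     # step b: hypermean
    sigma2s, new_thetas = [], []
    for y, th in zip(Y, thetas):
        sse = sum((v - th) ** 2 for v in y)
        s2 = inv_gamma(rng, a0 + 0.5 * len(y), b0 + 0.5 * sse)  # step c: noise variance
        cand = rng.gauss(th, step)                  # step d.i: random walk candidate
        log_a = (log_lik(y, cand, s2) + log_parent(cand, mu, var)
                 - log_lik(y, th, s2) - log_parent(th, mu, var))  # step d.ii, in log form
        if rng.random() < math.exp(min(0.0, log_a)):  # step d.iii: accept or keep
            th = cand
        sigma2s.append(s2)
        new_thetas.append(th)
    return mu, var, sigma2s, new_thetas

rng = random.Random(42)
true_thetas = [rng.gauss(5.0, 1.0) for _ in range(8)]                  # synthetic specimens
Y = [[t + rng.gauss(0.0, 0.3) for _ in range(20)] for t in true_thetas]
prior = (0.0, 0.01, 1.0, 3.0, 2.0, 0.1)  # assumed (mu0, nu0, Sigma0, m0, alpha0, beta0)
thetas = [sum(y) / len(y) for y in Y]    # step 1: initial values
mu_chain = []
for k in range(3000):
    mu, var, sigma2s, thetas = gibbs_sweep(Y, thetas, rng, prior, step=0.15)
    if k >= 500:                          # discard burn-in
        mu_chain.append(mu)
post_mean_mu = sum(mu_chain) / len(mu_chain)
print(post_mean_mu)
```

The acceptance test is carried out with the logarithm of \(a_c\) to avoid overflow or underflow in the ratio of densities. Capping the ratio at 1 does not change the outcome because \(u\) never exceeds 1.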

After an initial burn-in period, the distribution of the sample values generated by this algorithm converges to the target density. The proposal covariance matrix of the random walk, \(\Sigma_i^{(k)}\), is selected to facilitate proper mixing of the random walks used to generate sample values of the \(\theta_i\). During the adaptation period, the proposal covariance matrix is set to a scaled version of the covariance matrix of the last \(N_{cov}\) sample values (\(N_{cov}\) is defined as the adaptation frequency in the UCSD_UQ engine), with the scaling chosen to keep the acceptance rate in the range of 0.2 to 0.5.
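One possible way to implement such an adaptation for a scalar random walk is sketched below. The rule used to adjust the scale (dividing or multiplying by a fixed factor when the acceptance rate leaves the target range) is an assumption made for illustration. The text above does not state the engine's rule, and the function name and default values are hypothetical.

```python
from statistics import variance

def adapt_proposal(recent_samples, accept_rate, scale, low=0.2, high=0.5, factor=1.5):
    """Return (proposal_variance, scale) from the last N_cov samples of a scalar chain."""
    if accept_rate < low:
        scale /= factor   # too many rejections: take smaller steps
    elif accept_rate > high:
        scale *= factor   # too many acceptances: take larger steps
    return scale * variance(recent_samples), scale

recent = [1.0, 2.0, 3.0]  # stand-in for the last N_cov sample values
print(adapt_proposal(recent, accept_rate=0.1, scale=1.0))
print(adapt_proposal(recent, accept_rate=0.3, scale=1.0))
print(adapt_proposal(recent, accept_rate=0.9, scale=1.0))
```

For a vector-valued \(\theta_i\), the sample variance would be replaced by the sample covariance matrix of the last \(N_{cov}\) samples, scaled in the same way.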